Using RAID with the Debian-Installer

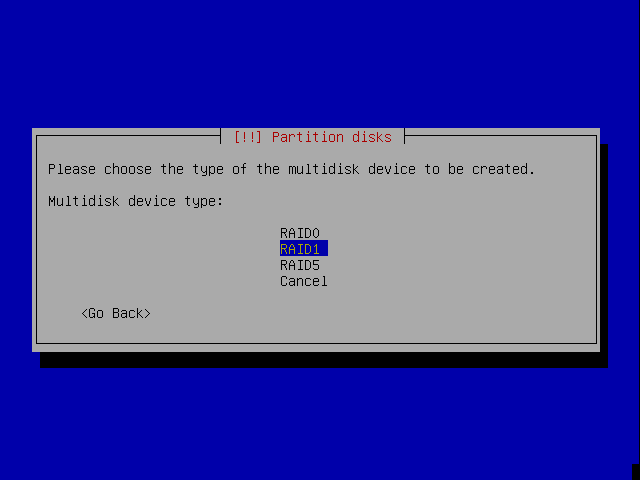

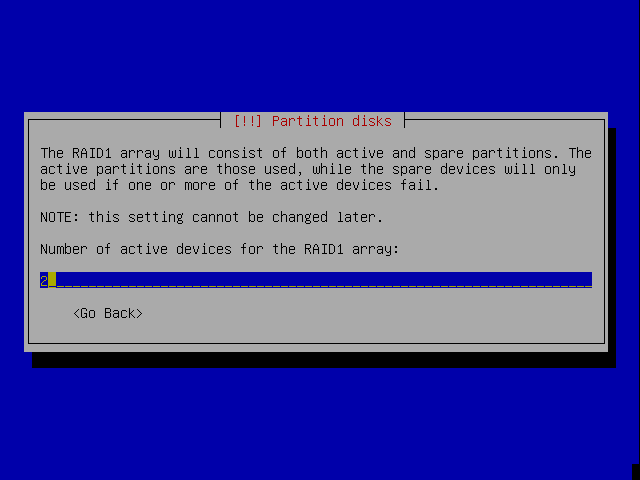

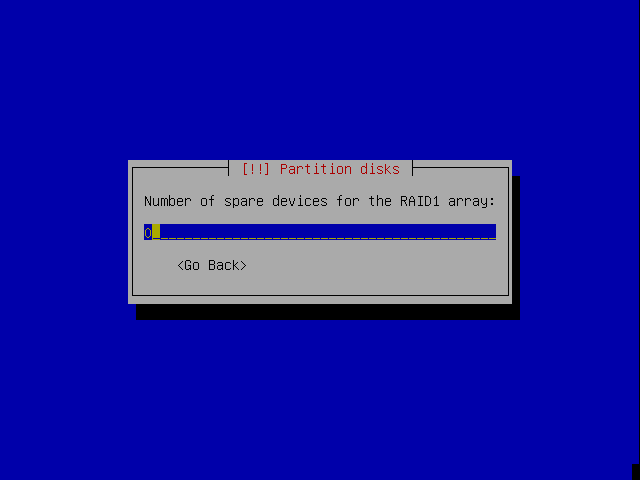

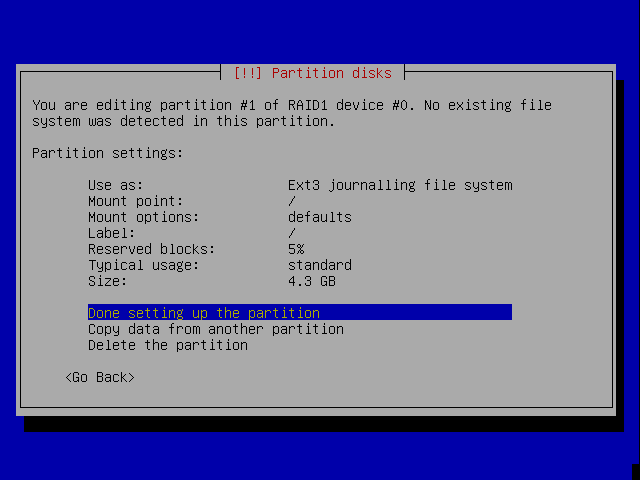

The following is a short graphical guide to creating Linux software RAID in RC2 of the Debian-Installer. A RAID1 device is created, consisting (in the interest of brevity) of a single disk partition. The device is formatted as ext3, and used as the system's root filesystem.

The information in this document is specific to Debian GNU/Linux 3.1 "Sarge" on i386.

Creating a RAID volume

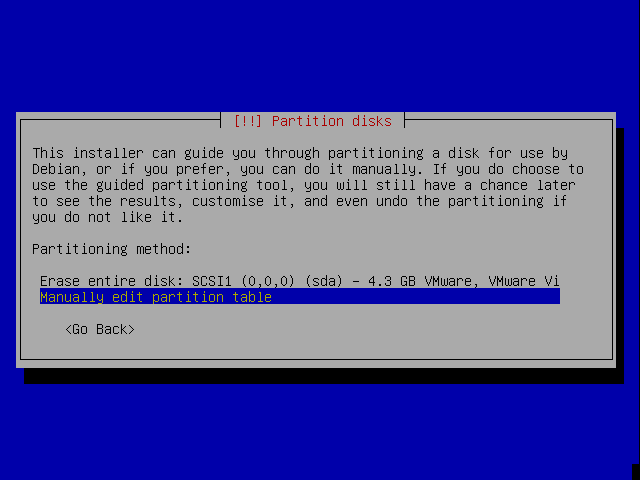

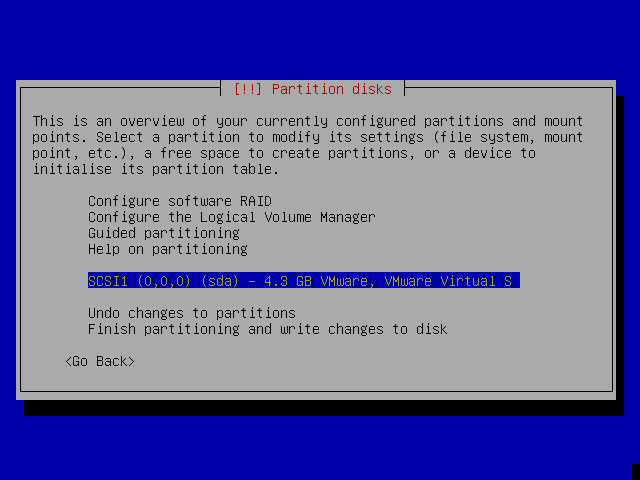

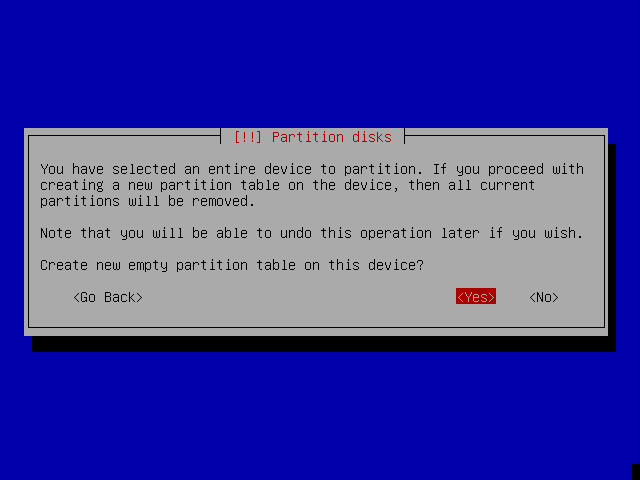

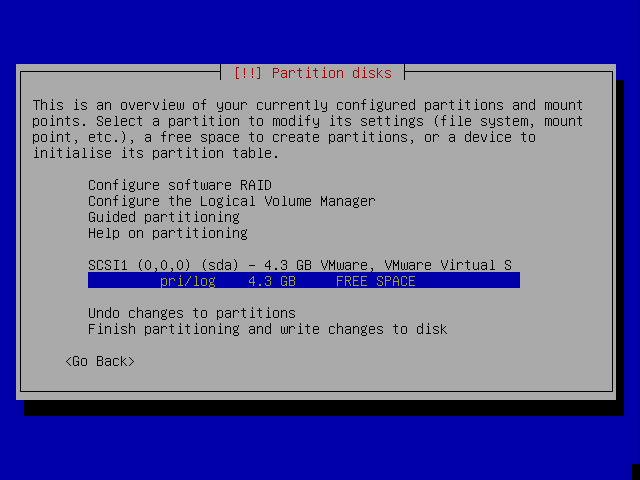

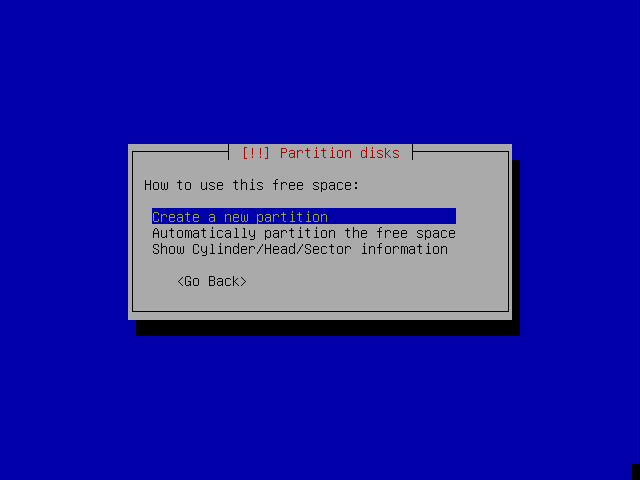

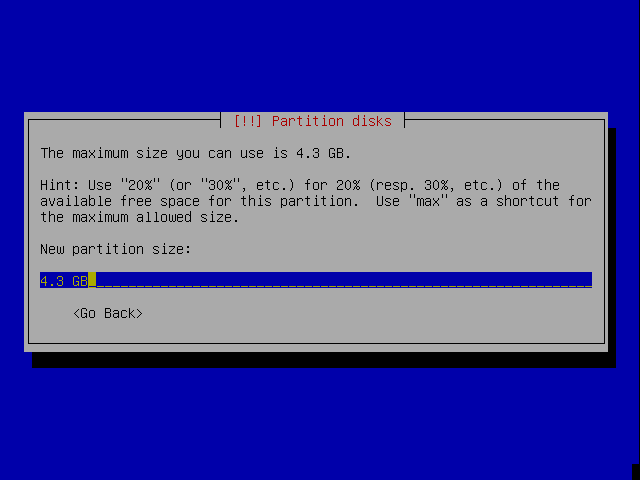

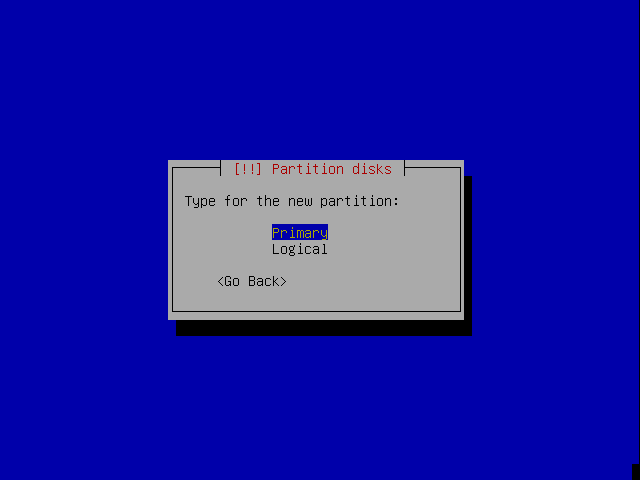

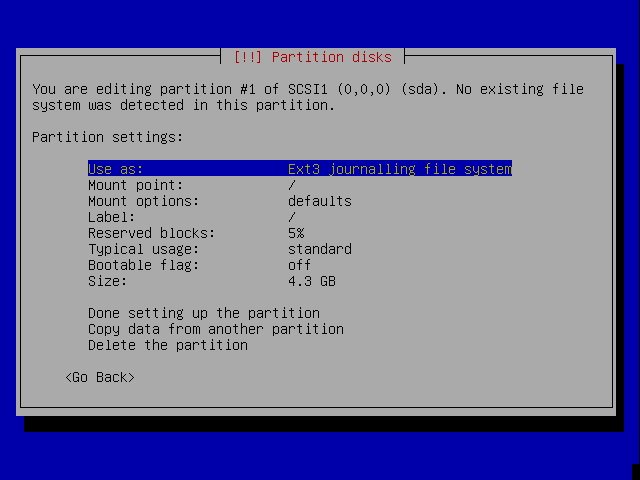

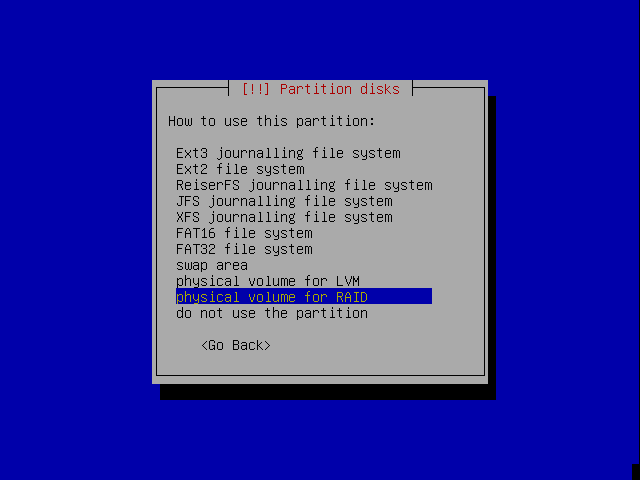

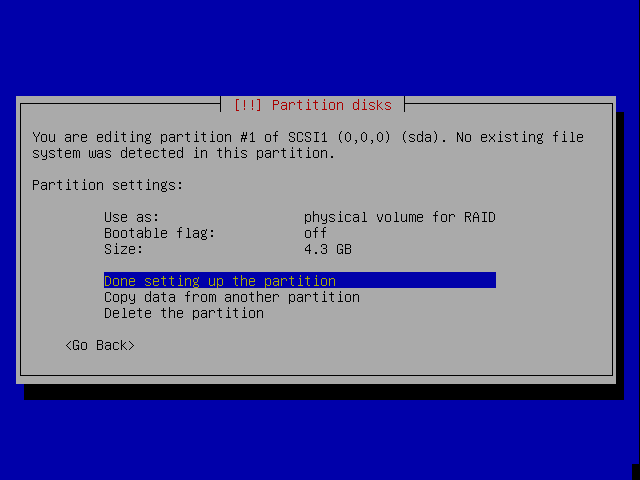

Create a number of partitions, and tell the installer that you wish to use each one as a "physical volume for RAID".

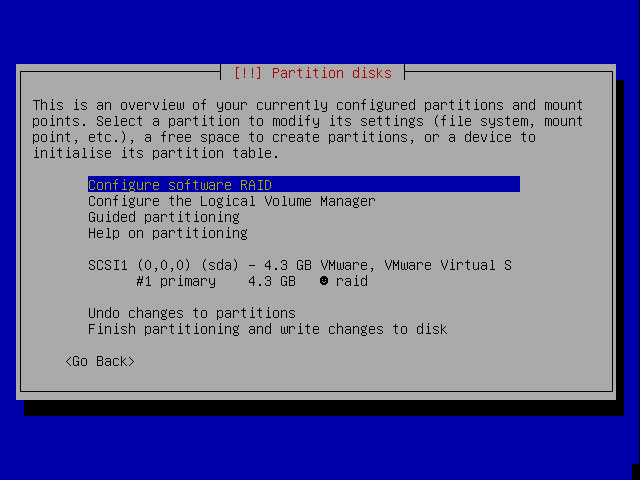

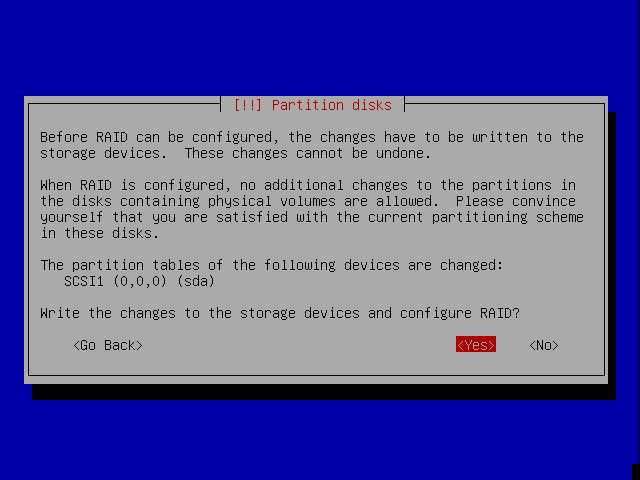

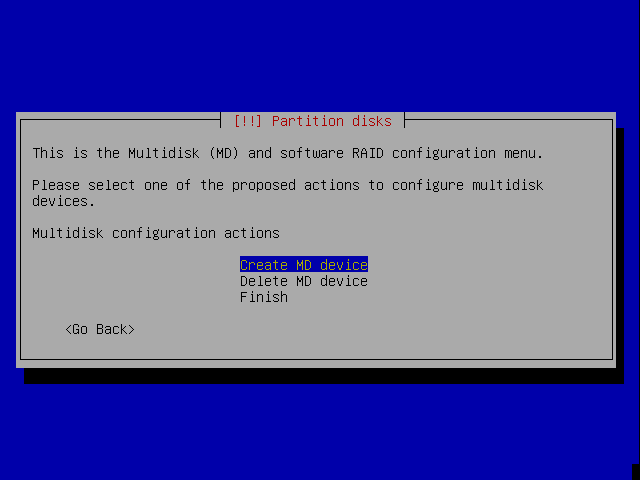

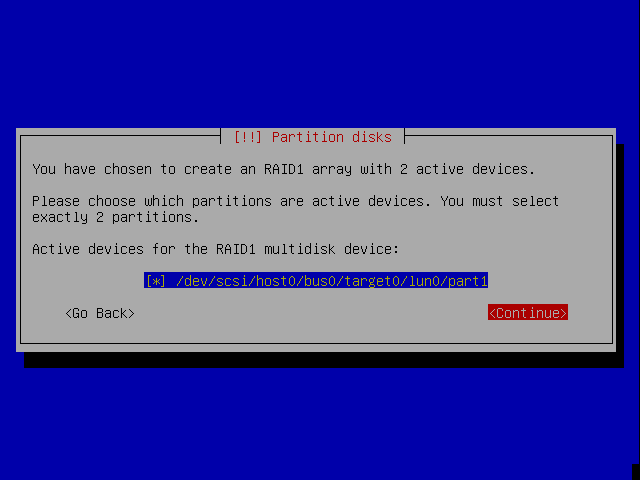

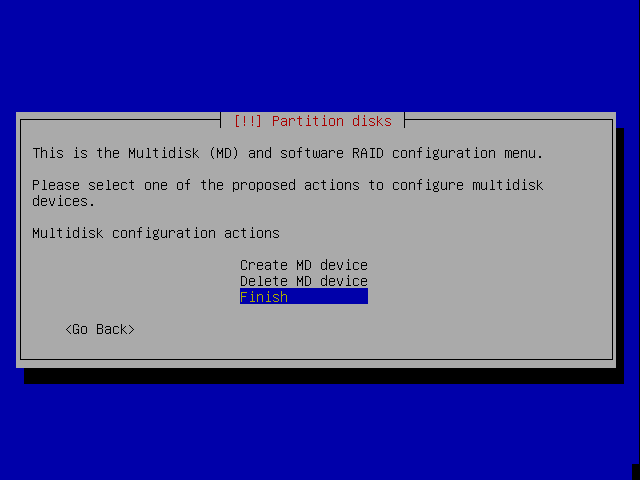

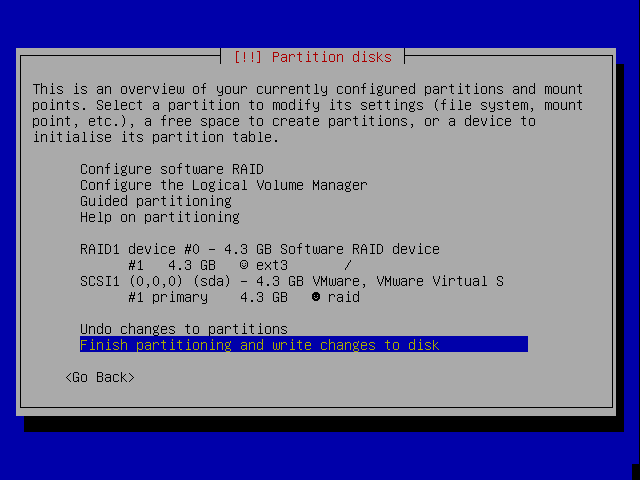

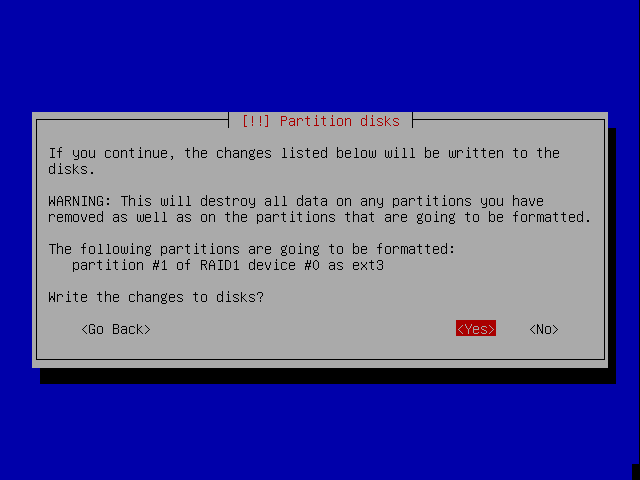

Once all such partitions have been created, select "Configure software RAID". You will then be able to create a RAID "logical volume" consisting of one or more RAID physical volumes.

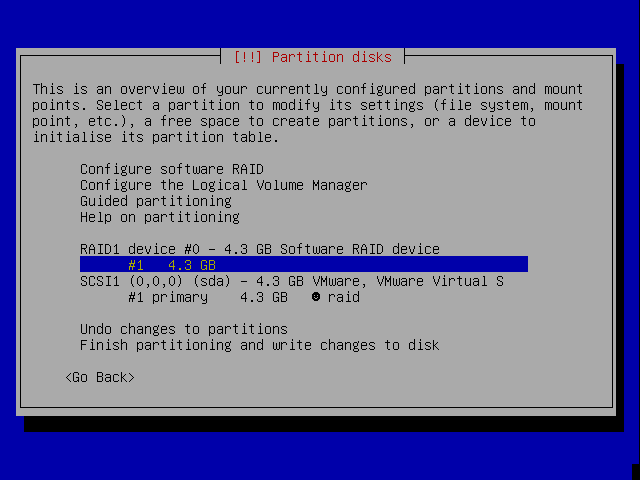

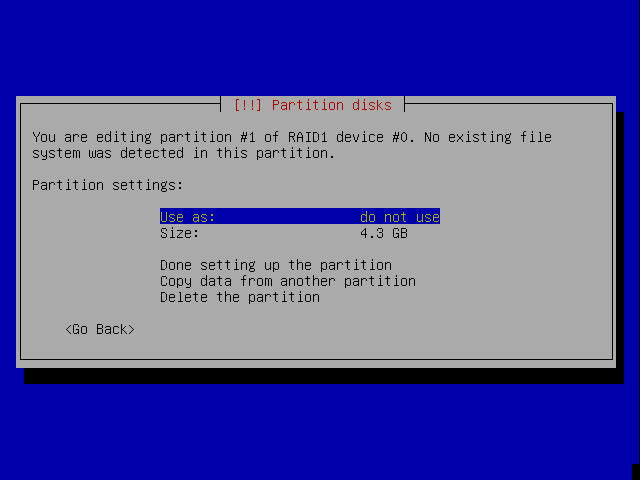

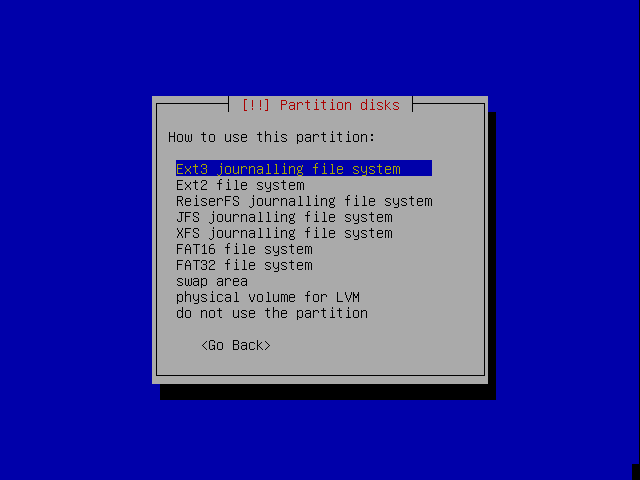

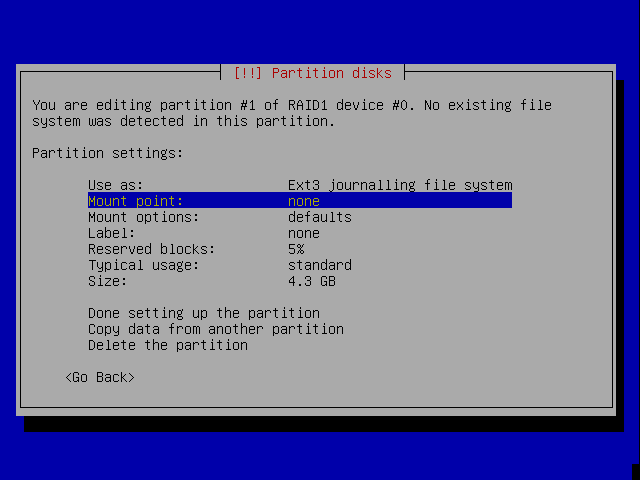

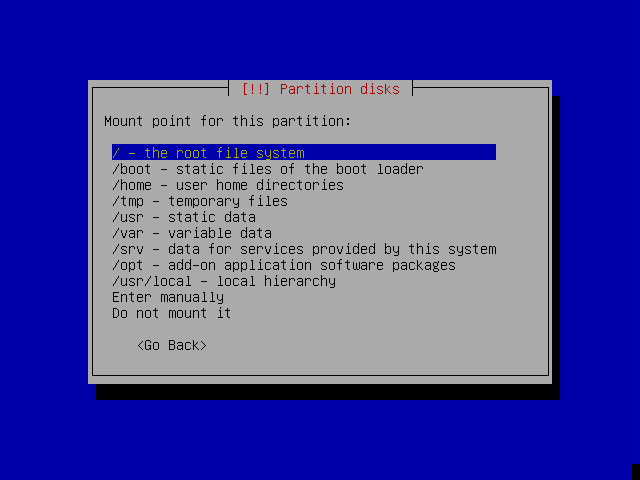

The logical volume will now show up in the list of disks and partitions, and can be configured to be formatted and mounted as if it were a standard partition.

On booting

This exact system will probably boot successfully. Hopefully this page will dispel some of the lore currently circulating concerning booting from a RAID system.

Theory of booting

When a PC comes to life, it reads and executes code from the first 512 bytes of the first hard disk. This area is known as the 'Master Boot Record'. PC BIOSes are usually very stupid—many will only examine the first disk on the system.
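The layout of that first sector can be illustrated with a short Python sketch. It assumes the classic PC MBR layout (446 bytes of boot code, four 16-byte partition entries, and the two-byte signature 0x55 0xAA at the end). The function names and the example partition values are hypothetical, chosen only for illustration.

```python
import struct

SECTOR_SIZE = 512
BOOT_SIGNATURE = b"\x55\xaa"      # last two bytes of a valid MBR
PARTITION_TABLE_OFFSET = 446      # boot code occupies bytes 0..445
PARTITION_ENTRY_SIZE = 16


def parse_mbr(sector):
    """Return the non-empty primary partition entries found in an MBR sector."""
    if len(sector) != SECTOR_SIZE:
        raise ValueError("an MBR is exactly 512 bytes long")
    if sector[510:512] != BOOT_SIGNATURE:
        raise ValueError("missing 0x55 0xAA boot signature")

    partitions = []
    for index in range(4):
        start = PARTITION_TABLE_OFFSET + index * PARTITION_ENTRY_SIZE
        entry = sector[start:start + PARTITION_ENTRY_SIZE]
        status, partition_type = entry[0], entry[4]
        lba_start, sector_count = struct.unpack_from("<II", entry, 8)
        if partition_type == 0:   # type 0 marks an unused slot
            continue
        partitions.append({
            "number": index + 1,
            "bootable": status == 0x80,
            "type": partition_type,
            "lba_start": lba_start,
            "sectors": sector_count,
        })
    return partitions


def make_example_mbr():
    """Build a synthetic MBR with one bootable partition of type 0xfd (hypothetical values)."""
    sector = bytearray(SECTOR_SIZE)
    entry = struct.pack("<B3sB3sII", 0x80, b"\x00" * 3, 0xFD, b"\x00" * 3, 63, 204800)
    sector[PARTITION_TABLE_OFFSET:PARTITION_TABLE_OFFSET + PARTITION_ENTRY_SIZE] = entry
    sector[510:512] = BOOT_SIGNATURE
    return bytes(sector)


if __name__ == "__main__":
    for partition in parse_mbr(make_example_mbr()):
        print(partition)
```

On a real system the 512 bytes would come from the start of the disk device rather than from `make_example_mbr()`; reading a device usually requires root privileges.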

The code that is loaded is known as the 'boot loader'. Its job is to load the Linux kernel into memory and run it[1]. Sarge on i386 ships with two boot loaders: GNU GRUB (the default) and LILO.

Like the BIOS that loaded it, the boot loader does not understand RAID, LVM, EVMS or other elaborate volume management schemes. Therefore, in the general case, the kernel cannot be loaded from a RAID volume. Instead, it is common to create a small partition for these files, mounted into the /boot directory[2].

However, a RAID 1 volume has the interesting property of not actually affecting how data is laid out on a disk. Therefore, a boot loader should be able to load the kernel from a RAID 1 volume without complications.
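This property can be shown with a toy model in Python. It is only a sketch: it assumes the array's own metadata lives away from the start of each member (as with the old md superblock format, which sits at the end of the device), so that data starts at the same offset on the array and on each member. The class and function names are hypothetical.

```python
class Raid1Mirror:
    """Toy model of a RAID 1 array: every write is copied to all members."""

    def __init__(self, member_count, size):
        self.size = size
        self.members = [bytearray(size) for _ in range(member_count)]

    def write(self, offset, data):
        if offset < 0 or offset + len(data) > self.size:
            raise ValueError("write falls outside the array")
        for member in self.members:
            # Same bytes, same offset, on every member: no striping, no parity.
            member[offset:offset + len(data)] = data

    def read(self, offset, length):
        return bytes(self.members[0][offset:offset + length])


def read_without_raid_support(member, offset, length):
    """What a RAID-unaware boot loader does: treat one member as a plain disk."""
    return bytes(member[offset:offset + length])


if __name__ == "__main__":
    array = Raid1Mirror(member_count=2, size=64)
    array.write(8, b"vmlinuz")
    for member in array.members:
        print(read_without_raid_support(member, 8, 7))
```

Because each member holds a complete copy at unchanged offsets, the RAID-unaware reader finds the same bytes on either member. A striped level such as RAID 0 or RAID 5 would not pass this check.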

Practice of booting

Out of the box, Debian Sarge will almost manage booting from a RAID volume. There are four caveats:

The order of the physical volumes that comprise your RAID volume must be similar across your disks. For example, md0 should consist of hda2, hdc2 and sda2. While you can create a RAID volume from any set of block devices, dissimilar partition layouts will mean a lot of hand-hacking menu.lst when you attempt to account for the following two points.

The GRUB setup scripts get confused if /boot exists on a RAID volume, and default to specifying groot=(hd0,0) in /boot/grub/menu.lst. If hda1 is not a member of your RAID volume then you must point groot at a partition that is a member, and run update-grub to update the boot menu options at the bottom of the file. Update: It looks like this might have been bug 292274, which was fixed with the upload of grub 0.95+cvs20040624-14 on the 4th of February, 2005.

By default, GRUB is only installed on the MBR of the first hard disk. If this disk fails, you will not be able to boot from the others unless you install GRUB onto each one manually. You can do this by running grub and entering the following:

    device (hd0) /dev/path/to/linux/disk
    root (hd0,*grub-partition-number*)
    setup (hd0)

For example, to install GRUB on hdc, such that it loads the kernel from hdc6:

    grub> device (hd0) /dev/hdc
    grub> root (hd0,5)
     Filesystem type is ext2fs, partition type 0xfd
    grub> setup (hd0)
     Checking if "/boot/grub/stage1" exists... yes
     Checking if "/boot/grub/stage2" exists... yes
     Checking if "/boot/grub/e2fs_stage1_5" exists... yes
     Running "embed /boot/grub/e2fs_stage1_5 (hd0)"... 16 sectors are embedded.
    succeeded
     Running "install /boot/grub/stage1 (hd0) (hd0)1+16 p (hd0,5)/boot/grub/stage2 /boot/grub/menu.lst"... succeeded
    Done.

The device command tells GRUB to treat the specified hard disk as if it were (hd0), that is, the first disk detected by the BIOS at boot time.

GRUB 2 has support for installing onto and reading Linux Software RAID (and LVM) devices! More information can be found at LVMandRAID - GRUB Wiki.

Unfortunately, the version of initrd-tools that comes with Sarge generates initrds that do not detect RAID volumes very robustly. An initrd will only use devices that were part of the array when mkinitrd was run (by default, at kernel installation time). If you alter the layout of your RAID array, you must rebuild your initrd. Bugs 269727 and 272034 track this problem.

Patches for some of these issues are available from bug 251905.
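The first and third caveats can be sketched in Python. The helper names below are hypothetical. The only facts taken from the text above are that the members of an array should share a partition number, and that GRUB counts partitions from zero (hdc6 becomes (hd0,5) once hdc is mapped to (hd0)). The sketch only prints the commands one would type at the grub prompt; it does not run GRUB.

```python
import re


def split_partition(name):
    """Split a Linux partition name such as 'hdc6' into ('hdc', 6)."""
    match = re.fullmatch(r"([a-z]+)(\d+)", name)
    if match is None:
        raise ValueError(f"not a partition name: {name!r}")
    return match.group(1), int(match.group(2))


def has_similar_layout(members):
    """True if every member uses the same partition number on its own disk."""
    return len({split_partition(member)[1] for member in members}) == 1


def grub_install_commands(member):
    """Commands for the grub shell that install GRUB on the member's disk."""
    disk, number = split_partition(member)
    # GRUB numbers partitions from zero, Linux from one.
    return [
        f"device (hd0) /dev/{disk}",
        f"root (hd0,{number - 1})",
        "setup (hd0)",
    ]


if __name__ == "__main__":
    members = ["hda2", "hdc2", "sda2"]   # the md0 example from the first caveat
    if not has_similar_layout(members):
        print("warning: partition numbers differ; menu.lst will need hand-editing")
    for member in members:
        print("\n".join(grub_install_commands(member)))
        print()
```

Calling `grub_install_commands("hdc6")` yields the same three commands as the hdc example in the third caveat.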

[1] Often, other files are required to boot the system, such as the initrd, and GRUB's configuration file menu.lst. "Loading the kernel" should be read as including these additional files.

[2] In the past, it was necessary to have a separate /boot partition for another reason: the 1024 cylinder limit. Although modern PCs do not suffer from such a limitation, the practice still lives on.

Sources

RHEL3 Reference Guide: A Detailed Look at the Boot Process